About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7,500 publications of agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications. We welcome you to explore it.

Displaying 10 of 326 results for "Tim Dorscheidt"

PA/C model with affinity

N. Leticia Abrica-Jacinto, Evguenii Kurmyshev, Héctor Juárez | Published Thursday, October 27, 2016

We present an agent-based model of opinion and tolerance dynamics in artificial societies. The formal mathematical model is based on the ideas of Social Influence, Social Judgment, and Social Identity theories.

SMILI: Small-scale fisheries Institutions and Local Interactions

Emilie Lindkvist, Xavier Basurto, Maja Schlüter | Published Thursday, March 09, 2017

The model represents an archetypical fishery in a co-evolutionary social-ecological environment, capturing different dimensions of trust between fishers and fish buyers for the establishment and persistence of self-governance arrangements.

COOPER - Flood impacts over Cooperative Winemaking Systems

David Nortes-Martinez | Published Thursday, February 08, 2018 | Last modified Friday, March 22, 2019

The model simulates flood damages and their propagation through a cooperative, productive farming system, characterized as a star-type network in which all elements of the system are connected to one another through a central element.

COMMAND-AND-CONTROL (peer reviewed)

Farzaneh Davari | Published Tuesday, September 10, 2019 | Last modified Thursday, September 12, 2019

Command-and-control policy in natural resource management, including water resources, is a long-established approach that has been debated, both theoretically and practically, from the perspective of complex social-ecological systems. To make systems ecologically resilient, policymakers today apply top-down policies that control communities through regulation. To explore how these policies may work and whether the ecological goal can be achieved through command-and-control policy, this research uses agent-based modeling (ABM) as an experimental platform for the Urmia Lake Basin (ULB) in Iran, a complex social-ecological system that has undergone a prolonged drought.

Despite uncertainty about whether the lake can be restored, there has been a consensus that it could be restored artificially through the nationally managed Urmia Lake Restoration Program (ULRP). To reduce water consumption in the basin, the ULRP broadly targets the agricultural sector and proposes changing crop patterns from high-water-demand (HWD) to low-water-demand (LWD) crops, a project that includes establishing water-police forces to control water consumption.

The ABM draws on a wide range of multidisciplinary studies of Urmia Lake at the basin and sub-basin levels, together with qualitative micro-level information, as its main conceptual sources. Findings under different strategies indicate that pursuing crop-pattern change by legally limiting farmers’ access to water could force farmers to change their crop patterns for a short period, as long as the number of police constantly increases. However, it is not a sustainable policy for either changing crop patterns or restoring the lake.

An ABM of historic British milk consumption

Matthew Gibson | Published Monday, December 20, 2021

Substitution of food products will be key to realising widespread adoption of sustainable diets. We present an agent-based model of decision-making and influences on food choice, and apply it to historically observed trends of British whole and skimmed (including semi-skimmed) milk consumption from 1974 to 2005. We aim to give a plausible representation of milk choice substitution and to test different mechanisms of choice consideration. Agents are consumers that perceive information regarding the two milk choices and hold values that inform their position on the health and environmental impact of those choices. Habit, social influence and post-decision evaluation are modelled. Representative survey data on human values and long-running public concerns empirically inform the model. An experiment compared two model variants by how well they reproduced these trends, measured as mean weekly milk consumption per person. The variants differed in how agents became disposed to consider alternative milk choices: one followed a threshold approach, the other was probability-based. All other model aspects remained unchanged. An evolutionary algorithm was used to calibrate each model variant independently to observed data. Following calibration, uncertainty analysis and global variance-based temporal sensitivity analysis were conducted. Both model variants were able to reproduce the general pattern of historical milk consumption; however, the probability-based approach gave a closer fit to the observed data, albeit over a wider range of uncertainty. This responds to, and further highlights, the need for research that examines and compares different models of human decision-making in agent-based and simulation models. This study is the first to present an agent-based model of food choice substitution in the context of British milk consumption.
It can serve as a valuable precursor to modelling dietary shift and sustainable product substitution towards plant-based alternatives in Britain.
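The abstract distinguishes two ways in which agents may become disposed to consider the alternative milk: a threshold approach and a probability-based one. The Python sketch below contrasts those two rule shapes in isolation. It is hypothetical: the scalar "dissatisfaction" input, the function names, and the logistic form of the probability rule are assumptions for illustration and do not reproduce the published model.

```python
import math
import random


def threshold_disposition(dissatisfaction: float, threshold: float) -> bool:
    """Hypothetical threshold rule: the agent considers the alternative
    only once dissatisfaction reaches a fixed threshold (deterministic)."""
    return dissatisfaction >= threshold


def probability_disposition(dissatisfaction: float, steepness: float,
                            midpoint: float, rng: random.Random) -> bool:
    """Hypothetical probability rule: the agent considers the alternative
    with a probability that rises smoothly (logistic curve, an assumption)
    as dissatisfaction grows."""
    p = 1.0 / (1.0 + math.exp(-steepness * (dissatisfaction - midpoint)))
    return rng.random() < p


if __name__ == "__main__":
    rng = random.Random(42)
    trials = 10_000
    for d in (0.2, 0.5, 0.8):
        # Share of simulated agents that become disposed to consider switching.
        share = sum(probability_disposition(d, 10.0, 0.5, rng)
                    for _ in range(trials)) / trials
        print(f"d={d}: threshold -> {threshold_disposition(d, 0.5)}, "
              f"probability -> {share:.2f}")
```

In this toy setting the threshold rule flips deterministically at a fixed level, whereas the probability rule produces a graded response across a population of agents.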

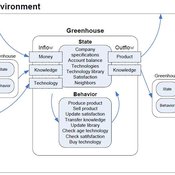

The various technologies used inside a Dutch greenhouse interact in combination with an external climate, resulting in an emergent internal climate, which contributes to the final productivity of the greenhouse. This model examines how differing technology development styles affects the overall ability of a community of growers to approach the theoretical maximum yield.

Universal Darwinism in Dutch Greenhouses

Julia Kasmire | Published Wednesday, May 09, 2012 | Last modified Saturday, April 27, 2013An ABM, derived from a case study and a series of surveys with greenhouse growers in the Westland, Netherlands. Experiments using this model showshow that the greenhouse horticulture industry displays diversity, adaptive complexity and an uneven distribution, which all suggest that the industry is an evolving system.

02 OamLab V1.10 - Open Atwood Machine Laboratory

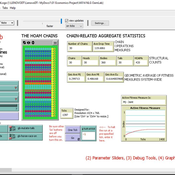

Garvin Boyle | Published Saturday, January 31, 2015 | Last modified Thursday, April 13, 2017

Using chains of replicas of Atwood’s Machine, this model explores implications of the Maximum Power Principle. It is one of a series of models exploring the dynamics of sustainable economics: PSoup, ModEco, EiLab, OamLab, MppLab, TpLab.

05 CmLab V1.17 – Conservation of Money Laboratory

Garvin Boyle | Published Saturday, April 15, 2017

In CmLab, we explore the implications of the phenomenon of Conservation of Money in a modern economy. This is one of a series of models exploring the dynamics of sustainable economics: PSoup, ModEco, EiLab, OamLab, MppLab, TpLab, CmLab.

Talent vs Luck: the role of randomness in success and failure

Alessandro Pluchino, Alessio Emanuele Biondo, Andrea Rapisarda | Published Monday, July 16, 2018

The largely dominant meritocratic paradigm of highly competitive Western cultures is rooted in the belief that success is due mainly, if not exclusively, to personal qualities such as talent, intelligence, skills, smartness, effort, willfulness, hard work or risk-taking. Sometimes we are willing to admit that a certain degree of luck could also play a role in achieving significant material success. In practice, however, it is rather common to underestimate the importance of external forces in individual success stories. It is well known that intelligence (or, more generally, talent and personal qualities) exhibits a Gaussian distribution among the population, whereas the distribution of wealth, often considered a proxy of success, typically follows a power law (Pareto law), with a large majority of poor people and a very small number of billionaires. Such a discrepancy between a normal distribution of inputs, with a typical scale (the average talent or intelligence), and a scale-invariant distribution of outputs suggests that some hidden ingredient is at work behind the scenes. In a recent paper, with the help of this very simple agent-based model implemented in NetLogo, we suggest that this ingredient is simply randomness. In particular, we show that, while some degree of talent is necessary to be successful in life, the most talented people almost never reach the highest peaks of success, being overtaken by mediocre but considerably luckier individuals. To our knowledge, this counterintuitive result, although implicitly suggested in a vast literature, is quantified here for the first time.
It sheds new light on the effectiveness of assessing merit on the basis of the level of success reached, and underlines the risks of distributing excessive honors or resources to people who, at the end of the day, could simply have been luckier than others. With the help of this model, several policy hypotheses are also addressed and compared to identify the most efficient strategies for public funding of research aimed at improving meritocracy, diversity and innovation.
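The central argument, that a normally distributed input (talent) combined with chance events can yield a highly skewed output (capital), can be illustrated with a minimal multiplicative-noise sketch in Python. This is not the authors' NetLogo model: the talent distribution Normal(0.6, 0.1), the starting capital of 10, the 80 time steps, the per-step event probability of 0.1, and the doubling/halving rules are all assumptions chosen for illustration, and random per-agent events stand in for whatever event mechanism the original model uses.

```python
import random
import statistics


def simulate(n_agents=1000, steps=80, p_event=0.1, seed=1):
    """Hypothetical sketch: talent ~ Normal(0.6, 0.1) clipped to [0, 1];
    each step an agent may meet a lucky or an unlucky event (assumed rules)."""
    rng = random.Random(seed)
    talent = [min(1.0, max(0.0, rng.gauss(0.6, 0.1))) for _ in range(n_agents)]
    capital = [10.0] * n_agents  # assumed equal starting capital
    for _ in range(steps):
        for i in range(n_agents):
            r = rng.random()
            if r < p_event:
                # Lucky event: exploited (capital doubles) with probability = talent.
                if rng.random() < talent[i]:
                    capital[i] *= 2.0
            elif r < 2 * p_event:
                # Unlucky event: capital halves regardless of talent.
                capital[i] /= 2.0
    return talent, capital


talent, capital = simulate()
richest = max(range(len(capital)), key=capital.__getitem__)
print("median capital:", statistics.median(capital))
print("max capital:", max(capital))
print("talent of richest agent:", round(talent[richest], 3))
print("max talent in population:", round(max(talent), 3))
```

Comparing the talent of the richest agent with the highest talent in the population shows, in this toy setting, how chance can decouple the two; exact numbers depend on the seed and on the assumed parameters.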
