About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with additional detailed metadata on the availability of code and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 745 results for "Jon Norberg"

Simulation Experiments of "The dynamics of corruption under an optional external supervision service"

Xin Zhou | Published Wednesday, June 21, 2023

The simulation experiment is for studying the influence of external supervision services on combating corruption.

Algorithm: evolutionary game theory

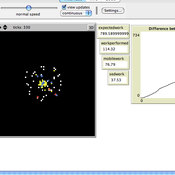

06b EiLab_Model_I_V5.00 NL

Garvin Boyle | Published Saturday, October 05, 2019

EiLab - Model I - is a capital exchange model. That is a type of economic model used to study the dynamics of modern money which, strangely, is very similar to the dynamics of energetic systems. It is a variation on the BDY models first described in the paper by Dragulescu and Yakovenko, published in 2000, entitled “Statistical Mechanics of Money”. This model demonstrates the ability of capital exchange models to produce a distribution of wealth that does not have a preponderance of poor agents and a small number of exceedingly wealthy agents.

This is a re-implementation of a model first built in the C++ application called Entropic Index Laboratory, or EiLab. The first eight models in that application were labeled A through H, and are the BDY models. The BDY models all have a single constraint: a limit on how poor agents can be. That is to say, the wealth distribution is bounded on the left. This ninth model is a variation on the BDY models with an added constraint that limits how wealthy an agent can be. It is bounded on both the left and the right.

EiLab demonstrates the inevitable role of entropy in such capital exchange models, and can be used to examine the connections between changing entropy and changes in wealth distributions at a very minute level.

…

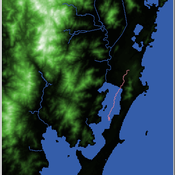

Shellmound Mobility

Henrique de Sena Kozlowski | Published Saturday, June 15, 2024

Least Cost Path (LCP) analysis is a recurrent theme in spatial archaeology. Based on a cost of movement image, the user can interpret how difficult it is to travel around in a landscape. This kind of analysis frequently uses GIS tools to assess different landscapes. This model incorporates some aspects of the LCP analysis based on GIS with the capabilities of agent-based modeling, such as the possibility to simulate random behavior when moving. In this model the agent will travel around the coastal landscape of Southern Brazil, assessing its path based on the different cost of travel through the patches. The agents represent shellmound builders (sambaquieiros), who will travel mainly through the use of canoes around the lagoons.

How it works

When the simulation starts, the hiker agent moves around the world, a representation of the lagoon landscape of Santa Catarina state in Southern Brazil. The agent’s movement is based on the travel cost of each patch. This travel cost is taken from a cost surface raster created in ArcMap to represent the different costs of movement around the landscape. Each tick, the agent has a chance to select the best possible patch in its Field of View (FOV), the one that will take it towards its target destination. If it doesn’t select the best possible patch, it randomly chooses one of the patches in its FOV to move to. The simulation stops when the hiker agent reaches the target destination. The elevation raster file and the cost surface map are based on a 1 arc-second (30 m) resolution SRTM image, scaled down 5 times. Each patch represents a square 150 m on a side, with an area of 0.0225 km². The dataset uses a UTM SIRGAS 2000 22S projection system. Four cost functions are available; they change the cost surface used by the hikers to navigate around the world.
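The movement rule described above can be illustrated with a minimal Python sketch. It is not the model's own code: the names `step`, `walk`, and `p_best` are hypothetical, the field of view is assumed to be the eight neighbouring patches, and "best" is assumed to mean the lowest sum of patch travel cost and remaining grid distance to the target. The original model may define all of these differently.

```python
import random


def step(position, target, cost, p_best, rng):
    """Return the next patch for the hiker (hypothetical helper)."""
    rows, cols = len(cost), len(cost[0])
    r, c = position
    # Assumption: the field of view is the 8 neighbouring patches inside the grid.
    fov = [
        (r + dr, c + dc)
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
    ]
    if rng.random() < p_best:
        # Assumption: "best" = lowest travel cost plus remaining distance to target.
        def score(patch):
            distance = max(abs(patch[0] - target[0]), abs(patch[1] - target[1]))
            return cost[patch[0]][patch[1]] + distance

        return min(fov, key=score)
    # Otherwise move to a random patch in the field of view.
    return rng.choice(fov)


def walk(start, target, cost, p_best=0.8, seed=0, max_ticks=10_000):
    """Move tick by tick until the target is reached or max_ticks is exceeded."""
    rng = random.Random(seed)
    path = [start]
    while path[-1] != target and len(path) <= max_ticks:
        path.append(step(path[-1], target, cost, p_best, rng))
    return path


# Usage: a uniform 5 x 5 cost surface, walking from one corner to the other.
uniform_cost = [[1.0] * 5 for _ in range(5)]
path = walk((0, 0), (4, 4), uniform_cost, p_best=1.0)
```

The `max_ticks` bound is an addition for safety: with a purely greedy choice on an uneven cost surface, a walker under these assumptions could oscillate between patches, which is one reason a random component is useful.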

The Effectiveness of Image-Scoring Under Different Ecological Conditions

G M Leighton | Published Monday, January 06, 2014

This set of models tests receivers’ ability to accurately rank signalers under various ecological and behavioral contexts.

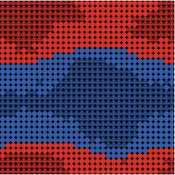

Thoughtless conformity and spread of norms in an artificial society (Grid Model)

Muhammad Azfar Nisar | Published Tuesday, May 27, 2014

This model is a small extension (rectangular layout) of Joshua Epstein’s (2001) model on the development of thoughtless conformity in an artificial society of agents.

Peer reviewed Lithic Raw Material Procurement and Provisioning

Jonathan Paige | Published Friday, March 06, 2015 | Last modified Thursday, March 12, 2015

This model simulates the lithic raw material use and provisioning behavior of a group that inhabits a permanent base camp and uses stone tools.

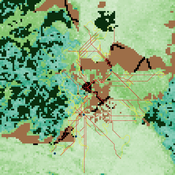

RaMDry - Rangeland Model in Drylands

Pascal Fust, Eva Schlecht | Published Friday, January 05, 2018 | Last modified Friday, April 01, 2022

RaMDry allows the study of the dynamic use of forage resources by herbivores in semi-arid savanna, with an emphasis on the effects of changes in climate and management. Seasonal dynamics affect the amount and the nutritional value of the available forage.

Peer reviewed BAMERS: Macroeconomic effect of extortion

Alejandro Platas López, Alejandro Guerra-Hernández | Published Monday, March 23, 2020 | Last modified Sunday, July 26, 2020

Inspired by the European project GLODERS, which thoroughly analyzed the dynamics of extortive systems, Bottom-up Adaptive Macroeconomics with Extortion (BAMERS) is a model to study the effect of extortion on macroeconomic aggregates through simulation. This methodology is adequate to cope with the scarce data associated with the hidden nature of extortion, which hinders analytical approaches. As a first approximation, a generic economy with healthy macroeconomic signals is modeled and validated, i.e., moderate inflation and a reasonable unemployment rate are guaranteed. This economy is then used to study the effect of extortion on those signals. It is worth mentioning that, as far as is known, there is no work that analyzes the effects of extortion on macroeconomic indicators from an agent-based perspective. Our results show that there are significant effects on some macroeconomic indicators. In particular, propensity to consume has a direct linear relationship with extortion, indicating that people become poorer, which impacts both the Gini Index and inflation. The GDP shows a marked contraction with the slightest presence of extortion in the economic system.

Peer reviewed COMMONSIM: Simulating the utopia of COMMONISM

Lena Gerdes, Manuel Scholz-Wäckerle, Ernest Aigner, Stefan Meretz, Hanno Pahl, Annette Schlemm, Jens Schröter, Simon Sutterlütti | Published Sunday, November 05, 2023

This research article presents an agent-based simulation hereinafter called COMMONSIM. It builds on COMMONISM, i.e. a large-scale commons-based vision for a utopian society. In this society, production and distribution of means are not coordinated via markets, exchange, and money, or a central polity, but via bottom-up signalling and polycentric networks, i.e. ex-ante coordination via needs. Heterogeneous agents care for each other in life groups and produce in different groups care, environmental as well as intermediate and final means to satisfy sensual-vital needs. Productive needs decide on the magnitude of activity in groups for a common interest, e.g. the production of means in a multi-sectoral artificial economy. Agents share cultural traits identified by different behaviour: a propensity for egoism, leisure, environmentalism, and productivity. The narrative of this utopian society follows principles of critical psychology and sociology, complexity and evolution, the theory of commons, and critical political economy. The article presents the utopia and an agent-based study of it, with emphasis on culture-dependent allocation mechanisms and their social and economic implications for agents and groups.

Peer reviewed A financial market with zero intelligence agents

edgarkp | Published Wednesday, March 27, 2024

The model’s aim is to represent the price dynamics under very simple market conditions, given the values adopted by the user for the model parameters. We suppose that the market for a financial asset is populated by agents assumed to have zero intelligence. In each period, a certain number of agents are randomly selected to participate in the market. Each of these agents decides, with equal probability, between proposing to make a transaction (talk = 1) or not (talk = 0). Again with equal probability, each participating agent decides to speak on the supply (ask) or the demand (bid) side of the market, and proposes a volume of assets drawn randomly from a uniform distribution. The granularity depends on various factors, including market conventions, the type of assets or goods being traded, and regulatory requirements. In some markets, high granularity is essential to capture small price movements accurately, while in others, coarser granularity is sufficient due to the nature of the assets or goods being traded.
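The order-generation step described above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the model's implementation: the function `generate_orders` and its parameters `n_agents`, `n_selected`, and `max_volume` are hypothetical names, and volumes are assumed to be integers drawn uniformly from 1 to `max_volume`. Price formation is not covered, since the description does not specify it.

```python
import random


def generate_orders(n_agents, n_selected, max_volume, rng):
    """Return the orders proposed in one period (hypothetical helper)."""
    # Randomly select the agents that participate in this period.
    selected = rng.sample(range(n_agents), n_selected)
    orders = []
    for agent in selected:
        # talk = 1: propose a transaction; talk = 0: stay silent (equal probability).
        talk = rng.randint(0, 1)
        if talk == 0:
            continue
        # Supply (ask) or demand (bid) side, again with equal probability.
        side = rng.choice(["ask", "bid"])
        # Assumption: integer volume, uniform on 1..max_volume.
        volume = rng.randint(1, max_volume)
        orders.append({"agent": agent, "side": side, "volume": volume})
    return orders


# Usage: one period with 20 of 100 agents selected.
rng = random.Random(42)
orders = generate_orders(n_agents=100, n_selected=20, max_volume=10, rng=rng)
```

Because each selected agent talks with probability one half, about half of the selected agents are expected to submit an order in any given period.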
