About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications on agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications. We welcome you to explore it.

Displaying 10 of 62 results for "Agent-based modelling"

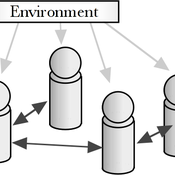

Peer reviewed An Agent-Based Model of Campaign-Based Watershed Management

Samuel Assefa, Aad Kessler, Luuk Fleskens | Published Monday, September 21, 2020 | Last modified Friday, June 04, 2021

The model simulates the national Campaign-Based Watershed Management program of Ethiopia. It includes three types of agents (farmers, Kebele/village administrators, and extension workers) and the physical environment, all of which interact with each other. The physical environment is represented by patches (fields). Farmers make decisions on the locations of micro-watersheds to be developed, participation in campaign works to construct soil and water conservation structures, and maintenance of these structures. These decisions affect the physical environment or generate model outcomes. The model is developed to explore conditions that enhance outcomes of the program by analyzing the effect on the area of land covered and the quality of soil and water conservation structures of (1) enhancing farmers' awareness and motivation, (2) establishing and strengthening micro-watershed associations, (3) introducing alternative livelihood opportunities, and (4) enhancing the commitment of local government actors.

Peer reviewed JuSt-Social COVID-19

Jennifer Badham | Published Thursday, June 18, 2020 | Last modified Monday, March 29, 2021

A NetLogo model that supports scenarios concerning general social distancing, shielding of high-risk individuals, and informing contacts when symptomatic. Documentation includes a user manual with some simple scenarios, and technical information including descriptions of key procedures and parameter values.

Peer reviewed Gregarious Behavior, Human Colonization and Social Differentiation Agent-Based Model

Sebastian Fajardo, Andrés Bernal, Gert Jan Hofstede, Mark R Kramer, Martijn de Vries | Published Thursday, August 20, 2020 | Last modified Thursday, October 29, 2020

Studies of colonization processes in past human societies often use a standard population model in which population is represented as a single quantity. Real populations in these processes, however, are structured with internal classes or stages, and classes are sometimes created based on social differentiation. In this work, information about the colonization of old Providence Island was used to create an agent-based model of the colonization process in a heterogeneous environment for a population with social differentiation. Agents were socially divided into two classes and modeled with dissimilar spatial clustering preferences. The model and simulations assessed the importance of gregarious behavior for colonization processes conducted in heterogeneous environments by socially differentiated populations. Results suggest that in these conditions, the colonization process starts with an agent cluster in the largest and most suitable area. The spatial distribution of agents maintained a tendency toward randomness as simulation time increased, even when gregariousness values increased. The most conspicuous effects in agent clustering were produced by the initial conditions and behavioral adaptations that increased the agent capacity to access more resources and the likelihood of gregariousness. The approach presented here could be used to analyze past human colonization events or support long-term conceptual design of future human colonization processes with small social formations into unfamiliar and uninhabited environments.

Using Agent-Based Modelling and Reinforcement Learning to Study Hybrid Threats

kpadur | Published Friday, September 20, 2024

Hybrid attacks coordinate the exploitation of vulnerabilities across domains to undermine trust in authorities and cause social unrest. Whilst such attacks have primarily been seen in active conflict zones, there is growing concern about the potential harm that can be caused by hybrid attacks more generally and a desire to discover how better to identify and react to them. In addressing such threats, it is important to be able to identify and understand an adversary’s behaviour. Game theory is the approach predominantly used in security and defence literature for this purpose. However, the underlying rationality assumption, the equilibrium concept of game theory, as well as the need to make simplifying assumptions can limit its use in the study of emerging threats. To study hybrid threats, we present a novel agent-based model in which, for the first time, agents use reinforcement learning to inform their decisions. This model allows us to investigate the behavioural strategies of threat agents with hybrid attack capabilities as well as their broader impact on the behaviours and opinions of other agents.

epiworldR Type: Fast Agent-Based Epi Models

George G. Vega Yon, Derek Meyer | Published Monday, August 26, 2024

A flexible framework for Agent-Based Models (ABM), the ‘epiworldR’ package provides methods for prototyping disease outbreaks and transmission models using a ‘C++’ backend, making it very fast. It supports multiple epidemiological models, including the Susceptible-Infected-Susceptible (SIS), Susceptible-Infected-Removed (SIR), Susceptible-Exposed-Infected-Removed (SEIR), and others, involving arbitrary mitigation policies and multiple-disease models. Users can specify infectiousness/susceptibility rates as a function of agents’ features, providing great complexity for the model dynamics. Furthermore, ‘epiworldR’ is ideal for simulation studies featuring large populations.

Team Structure and Task Performance

Davide Secchi, Martin Neumann | Published Monday, August 05, 2024

This model was designed to study resilience in organizations. Inspired by ethnographic work, it pursues the simple goal of understanding whether team structure affects the way in which tasks are performed. In so doing, it compares the ‘hybrid’ data-inspired structure with three more traditional structures (i.e. hierarchy, flexible/relaxed hierarchy, and anarchy/disorganization).

Political Participation

Didier Ruedin | Published Saturday, April 12, 2014 | Last modified Saturday, November 18, 2023

Implementation of Milbrath’s (1965) model of political participation. Individual participation is determined by stimuli from the political environment, interpersonal interaction, as well as individual characteristics.

Controlling the misinformation diffusion in social media by the effect of different classes of agents

Ali Khodabandeh Yalabadi | Published Thursday, October 05, 2023

An agent-based framework to simulate the diffusion of a piece of misinformation according to the SBFC model, in which the fake news and its debunking compete in a social network. By considering new classes of agents, this model is closer to reality and proposes different strategies to mitigate and control misinformation.

A replication and extension of the Taylor's Simulation Model of Insurance Market Dynamics in C#

Rei England | Published Sunday, September 24, 2023

A simple model is constructed using C# to capture key features of market dynamics, while also producing reasonable results for the individual insurers. A replication of Taylor’s model is also constructed in order to compare results with the new premium setting mechanism. To enable the comparison of the two premium mechanisms, the rest of the model set-up is maintained as in the Taylor model. As in the Taylor example, homogeneous customers are represented as a total market exposure which is allocated amongst the insurers.

In each time period, the model undergoes the following steps:

1. Insurers set competitive premiums per exposure unit

2. Losses are generated based on each insurer’s share of the market exposure

3. Accounting results are calculated for each insurer

…
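The three listed steps can be sketched as a single period update. This is only an illustration in Python (the published model is written in C#), and it does not reproduce either premium mechanism: the flat premium, the uniform loss draw, and the names `run_period`, `market_shares`, and `premium_per_unit` are all assumptions made for the example. The steps elided above are not filled in.

```python
import random


def run_period(market_shares, total_exposure, premium_per_unit, rng):
    """Run the three listed steps once and return per-insurer accounts.

    All rules below are hypothetical placeholders, not the model's own.
    """
    accounts = {}
    for insurer, share in market_shares.items():
        # Each insurer holds a share of the total market exposure.
        exposure = share * total_exposure

        # Step 1 (placeholder): every insurer charges the same flat premium
        # per exposure unit; the real premium-setting rule is not shown here.
        premium_income = premium_per_unit * exposure

        # Step 2: losses scale with the insurer's exposure. The loss ratio
        # is an assumed uniform draw between 0.5 and 1.1.
        losses = exposure * premium_per_unit * rng.uniform(0.5, 1.1)

        # Step 3: a minimal accounting result for the period.
        accounts[insurer] = {
            "exposure": exposure,
            "premium_income": premium_income,
            "losses": losses,
            "profit": premium_income - losses,
        }
    return accounts


accounts = run_period({"A": 0.6, "B": 0.4}, 1000.0, 2.0, random.Random(0))
```

Passing the random generator in explicitly keeps a run reproducible, which makes it easier to compare two premium mechanisms on the same loss draws.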

An Agent-Based Model of an Insurance Market driven by Supply and Demand with Imperfectly Estimated Strategies in C#

Rei England | Published Sunday, September 24, 2023

This is a simulation of an insurance market where the premium moves according to the balance between supply and demand. In this model, insurers set their supply with the aim of maximising their expected utility gain while operating under imperfect information about both customer demand and underlying risk distributions.

There are seven types of insurer strategies. One type follows a rational strategy within the bounds of imperfect information. The other six types also seek to maximise their utility gain, but base their market expectations on a chartist strategy. Under this strategy, market premium is extrapolated from trends based on past insurance prices. This is subdivided according to whether the insurer is trend following or a contrarian (counter-trend), and further depending on whether the trend is estimated from short-term, medium-term, or long-term data.
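The six chartist variants can be read as two directions (trend following or contrarian) crossed with three look-back horizons. A minimal sketch of that idea in Python follows. The least-squares slope, the one-step extrapolation, and the window lengths of 3, 6, and 12 periods are assumptions chosen for illustration; the model's actual estimation procedure (implemented in C#) may differ.

```python
def trend_slope(prices, window):
    """Least-squares slope of the last `window` prices against time."""
    recent = prices[-window:]
    n = len(recent)
    if n < 2:
        return 0.0  # not enough data to estimate a trend
    x_mean = (n - 1) / 2
    y_mean = sum(recent) / n
    num = sum((i - x_mean) * (p - y_mean) for i, p in enumerate(recent))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def chartist_forecast(prices, window, contrarian=False):
    """Extrapolate the next premium from the recent trend.

    A trend follower expects the trend to continue; a contrarian
    expects it to reverse.
    """
    direction = -1.0 if contrarian else 1.0
    return prices[-1] + direction * trend_slope(prices, window)


# Hypothetical horizons for short-, medium-, and long-term data.
WINDOWS = {"short": 3, "medium": 6, "long": 12}

history = [100.0, 102.0, 104.0, 106.0]
follower = chartist_forecast(history, WINDOWS["short"])
contrarian = chartist_forecast(history, WINDOWS["short"], contrarian=True)
```

With this steadily rising history, the trend follower forecasts a higher premium than the last observed price and the contrarian forecasts a lower one.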

Customers are modelled as a whole and allocated between insurers according to available supply. Customer demand is calculated according to a logit choice model based on the expected utility gain of purchasing insurance for an average customer versus the expected utility gain of non-purchase.
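As a rough illustration of the demand side, the sketch below uses a standard binary logit: the probability that an average customer buys depends on the difference between the two expected utility gains. The `sensitivity` parameter, the pro-rata allocation rule, and the function names are assumptions made for this Python example and are not taken from the model's C# code.

```python
import math


def purchase_probability(u_buy, u_no_buy, sensitivity=1.0):
    """Binary logit probability that an average customer buys insurance."""
    return 1.0 / (1.0 + math.exp(-sensitivity * (u_buy - u_no_buy)))


def allocate_demand(total_demand, supply):
    """Split aggregate demand across insurers in proportion to supply.

    Hypothetical rule: no insurer sells more than it supplies, so demand
    beyond total supply goes unmet.
    """
    total_supply = sum(supply.values())
    if total_supply <= 0:
        return {name: 0.0 for name in supply}
    fill = min(1.0, total_demand / total_supply)
    return {name: s * fill for name, s in supply.items()}


market_size = 100.0  # assumed number of potential exposure units
demand = market_size * purchase_probability(u_buy=0.2, u_no_buy=0.2)
sold = allocate_demand(demand, {"A": 60.0, "B": 40.0})
```

When the two expected utility gains are equal, the logit gives a purchase probability of one half, so half of the assumed market demands cover and each insurer sells half of what it offered.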
