About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with additional detailed metadata on availability of code and bibliometric information on the landscape of ABM/IBM publications, that we welcome you to explore.

Displaying 10 of 131 results for "Jule Thober"

This model was developed to test the usability of evolutionary computing and reinforcement learning by extending a well-known agent-based model. Sugarscape (Epstein & Axtell, 1996) has been used to demonstrate migration, trade, wealth inequality, disease processes, sex, culture, and conflict. This model focuses on conflict to demonstrate how machine learning methodologies could be applied.

The code is based on the Sugarscape 2 Constant Growback model, available in the NetLogo models library. New code was added to the existing model, unneeded code was removed, and existing code was modified to support the changes. Support for the original movement rule was retained while evolutionary computing, Q-Learning, and SARSA Learning were added.
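To illustrate the difference between the two reinforcement learning rules named above, here is a minimal Python sketch of the textbook Q-Learning and SARSA updates. It is not taken from the model's NetLogo code: the function names, the dictionary-based Q-table, and the default values of `alpha` (learning rate) and `gamma` (discount factor) are all assumptions made for illustration.

```python
# Hypothetical sketch of the standard tabular update rules.
# The Q-table is a dict keyed by (state, action); missing entries count as 0.0.

def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Off-policy update: bootstrap from the best action in next_state."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q[(state, action)]


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """On-policy update: bootstrap from the action actually taken next."""
    next_value = q.get((next_state, next_action), 0.0)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * next_value - old)
    return q[(state, action)]
```

The only difference is the bootstrap term: Q-Learning uses the maximum value over the next state's actions, while SARSA uses the value of the action the agent actually chose.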

IDEAL

Arika Ligmann-Zielinska | Published Thursday, August 07, 2014

IDEAL: Agent-Based Model of Residential Land Use Change in which the choice of new residential development is based on the Ideal-point decision rule.

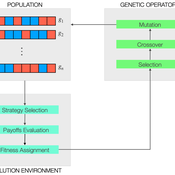

Peer reviewed Evolutionary Economic Learning Simulation: A Genetic Algorithm for Dynamic 2x2 Strategic-Form Games in Python

Vinicius Ferraz, Thomas Pitz | Published Friday, April 08, 2022

This project combines game theory and genetic algorithms in a simulation model for evolutionary learning and strategic behavior. It is often observed in the real world that strategic scenarios change over time, and deciding agents need to adapt to new information and environmental structures. Yet, game theory models often focus on static games, even for dynamic and temporal analyses. This simulation model introduces a heuristic procedure that enables these changes in strategic scenarios with Genetic Algorithms. Using normalized 2x2 strategic-form games as input, computational agents can interact and make decisions using three pre-defined decision rules: Nash Equilibrium, Hurwicz Rule, and Random. The games are then allowed to change over time as a function of the agents’ behavior through crossover and mutation. As a result, strategic behavior can be modeled in several simulated scenarios, and their impacts and outcomes can be analyzed, potentially transforming conflictual situations into harmony.
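As a rough illustration of one of the decision rules mentioned above, the following Python sketch applies the classical Hurwicz criterion to the row player's payoffs in a 2x2 game. It is not the project's code: the function name `hurwicz_choice`, the payoff layout, and the optimism coefficient `alpha` are assumptions, and the listing does not say how the model parameterizes the rule.

```python
# Hypothetical sketch of the Hurwicz criterion for a row player.
# payoffs[r][c] is the row player's payoff when playing row r against column c.

def hurwicz_choice(payoffs, alpha=0.5):
    """Return (best_row, scores), where each row's score blends its best
    and worst payoff: alpha * max + (1 - alpha) * min.
    alpha = 1 is fully optimistic (maximax), alpha = 0 fully pessimistic (maximin).
    Ties are resolved in favor of the lower row index."""
    scores = [alpha * max(row) + (1 - alpha) * min(row) for row in payoffs]
    best_row = max(range(len(scores)), key=scores.__getitem__)
    return best_row, scores


# Example: row 0 is risky (4 or 0), row 1 is safe (2 either way).
risky_vs_safe = [[4, 0], [2, 2]]
pessimist_row, _ = hurwicz_choice(risky_vs_safe, alpha=0.25)
optimist_row, _ = hurwicz_choice(risky_vs_safe, alpha=0.75)
```

With these payoffs a pessimistic agent prefers the safe row and an optimistic agent prefers the risky one, which shows how a single coefficient changes the chosen strategy.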

ICARUS - a multi-agent compliance inspection model

Slaven Smojver | Published Monday, May 09, 2022

ICARUS is a multi-agent compliance inspection model (ICARUS - Inspecting Compliance to mAny RUleS). The model is applicable to environments where an inspection agency, via centrally coordinated inspections, examines compliance in organizations which must comply with multiple provisions (rules). The model (ICARUS) contains 3 types of agents: entities, inspection agency and inspectors / inspections. ICARUS describes a repeated, simultaneous, non-cooperative game of pure competition. Agents have imperfect, incomplete, asymmetric information. Entities in each move (tick) choose a pure strategy (comply/violate) for each rule, depending on their own subjective assessment of the probability of the inspection. The Inspection Agency carries out the given inspection strategy.

A more detailed description of the model is available in the .nlogo file.

Full description of the model (in line with the ODD+D protocol) and the analysis of the model (including verification, validation and sensitivity analysis) can be found in the attached documentation.

Opinion Dynamics Under Intergroup Conflict Escalation

Meysam Alizadeh, Alin Coman, Michael Lewis, Katia Sycara | Published Friday, March 14, 2014 | Last modified Wednesday, October 29, 2014

We develop an agent-based model to explore the effect of perceived intergroup conflict escalation on the number of extremists. The proposed model builds on the 2D bounded confidence model proposed by Huet et al. (2008).

Simulation model replicating the five different games that were run during a workshop of the TISSS Lab

tissslab | Published Friday, April 30, 2021

The three-day participatory workshop organized by the TISSS Lab had 20 participants who were academics in different career stages ranging from university student to professor. For each of the five games, the participants had to move between tables according to some pre-specified rules. After the workshop, both the participants’ perceptions of the games’ complexities and the participants’ satisfaction with the games were recorded.

To obtain additional objective measures of the games’ complexities, the games were also simulated using this model. The simulation model is therefore an as-accurate-as-possible reproduction of the workshop games: it has 20 participants moving between 5 different tables. The rules that specify who moves when vary from game to game. For example, Game 3 has the rule: “move if you’re sitting next to someone who is wearing white or no socks”.

An exact description of the workshop games and the associated simulation models can be found in the paper “The relation between perceived complexity and happiness with decision situations: searching for objective measures in social simulation games”.

Digital divide and opinion formation

Dongwon Lim | Published Friday, November 02, 2012 | Last modified Monday, May 20, 2013

This model extends the bounded confidence model of Deffuant and Weisbuch. It introduces online contexts in which a person can deliver his or her opinion to several other persons. There are 2 additional parameters: accessibility and connectivity.
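For readers unfamiliar with the base model, here is a minimal Python sketch of the pairwise update commonly attributed to the Deffuant and Weisbuch bounded confidence model. It covers only the base rule: the extension's accessibility and connectivity parameters are not modeled because the listing does not define them. The function names and the default values of `epsilon` (confidence bound) and `mu` (convergence rate) are assumptions.

```python
import random

# Hypothetical sketch of the base bounded confidence interaction only.

def interact(xi, xj, epsilon=0.2, mu=0.5):
    """Two agents move toward each other only if their opinions differ
    by less than the confidence bound epsilon; otherwise nothing changes."""
    if abs(xi - xj) < epsilon:
        return xi + mu * (xj - xi), xj + mu * (xi - xj)
    return xi, xj


def step(opinions, epsilon=0.2, mu=0.5, rng=random):
    """Pick two distinct agents at random and apply the pairwise rule in place."""
    i, j = rng.sample(range(len(opinions)), 2)
    opinions[i], opinions[j] = interact(opinions[i], opinions[j], epsilon, mu)
    return i, j
```

With `mu=0.5` two sufficiently close agents meet at their midpoint, while agents further apart than `epsilon` ignore each other, which is what allows separate opinion clusters to persist.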

The Informational Dynamics of Regime Change

Dominik Klein, Johannes Marx | Published Saturday, October 07, 2017 | Last modified Tuesday, January 14, 2020

We model the epistemic dynamics preceding political uprising. Before deciding whether to start protests, agents need to estimate the amount of discontent with the regime. This model simulates the dynamics of group knowledge about general discontent.

Concession Forestry Modeling

Andrew Bell, Rick L Riolo, Jacqueline M Doremus, Daniel G Brown, Thomas P Lyon, John Vandermeer, Arun Agrawal | Published Thursday, January 23, 2014

A logging agent builds roads based on the location of high-value hotspots, and cuts trees based on road access. A forest monitor sanctions the logger on observed infractions, reshaping the pattern of road development.

How to not get stuck – an ant model showing how negative feedback due to crowding maintains flexibility in ant foraging

Tomer Czaczkes | Published Thursday, December 17, 2015

Positive feedback can lead to “trapping” in local optima. Adding a simple negative feedback effect, based on ant behaviour, prevents this trapping.
