About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications of agent-based and individual-based models, with additional detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 247 results for "Yue Dou"

Product Diffusion Model in an Advance Selling Strategy

Peng Shao | Published Tuesday, March 15, 2016 | Last modified Tuesday, March 15, 2016

The model can be used to describe product diffusion in an advance selling strategy. It takes into account consumers’ product adoption and describes consumers’ online behavior based on four states.

Direct versus Connect

Steven Kimbrough | Published Sunday, January 15, 2023

This NetLogo model is an implementation of the mostly verbal (and graphic) model in Jarrett Walker’s Human Transit: How Clearer Thinking about Public Transit Can Enrich Our Communities and Our Lives (2011). Walker’s discussion is in the chapter “Connections or Complexity?”. See especially figure 12-2, which is on page 151.

In “Connections or Complexity?”, Walker frames the matter as involving a choice between two conflicting goals. The first goal is to minimize connections, that is, the need to make transfers, in a transit system. People naturally prefer direct routes. The second goal is to minimize complexity. Why? Well, read the chapter, but as a general proposition we want to avoid unnecessary complexity with its attendant operating characteristics (confusing route plans in the case of transit) and management and maintenance challenges. With complexity generally comes degraded robustness and resilience.

How do we, how can we, choose between these conflicting goals? The grand suggestion here is that we only choose indirectly, implicitly. In the present example of connections versus complexity we model various alternatives and compare them on measures of performance (MoP) other than complexity or connections per se. The suggestion is that connections and complexity are indicators of, heuristics for, other MoPs that are more fundamental, such as cost, robustness, energy use, etc., and it is these that we at bottom care most about. (Alternatively, and not inconsistently, we can view connections and complexity as two of many MoPs, with the larger issue to be resolved in light of many MoPs, including but not limited to complexity and connections.) We employ modeling to get a handle on these MoPs. Typically, there will be several, taking us thus to a multiple criteria decision making (MCDM) situation. That’s the big picture.

Eco-Evolutionary Pathways Toward Industrial Cities

Handi Chandra Putra | Published Thursday, May 21, 2020

Industrial location theory has not emphasized environmental concerns, and research on industrial symbiosis has not emphasized workforce housing concerns. This article brings jobs, housing, and environmental considerations together in an agent-based model of industrial and household location. It shows that four classic outcomes emerge from the interplay of a relatively small number of explanatory factors: the isolated enterprise with commuters; the company town; the economic agglomeration; and the balanced city.

Peer reviewed A Simple Agent-Based Spatial Model of the Economy: Tools for Policy

Bernardo Furtado, Isaque Daniel Rocha Eberhardt | Published Tuesday, July 05, 2022

This study simulates the evolution of artificial economies in order to understand the tax relevance of administrative boundaries in the quality of life of their citizens. The modeling involves the construction of a computational algorithm, which includes citizens, bound into families; firms; and governments, all of them interacting in markets for goods, labor and real estate. The real estate market allows families to move to dwellings with higher quality or lower price when the families capitalize property values. The goods market allows consumers to search among a flexible number of firms, choosing by price and proximity. The labor market entails a matching process between firms (given their location) and candidates, according to their qualification. The government may be configured into one, four or seven distinct sub-national governments, which are all economically conurbated. The role of government is to collect taxes on the value added of firms in its territory and invest the taxes into higher levels of quality of life for residents. The results suggest that the configuration of administrative boundaries is relevant to the levels of quality of life arising from the reversal of taxes. The model with seven regions is more dynamic, but more unequal and heterogeneous across regions. The simulation with only one region is more homogeneously poor. The study seeks to contribute to a theoretical and methodological framework as well as to describe, operationalize and test computer models of public finance analysis, with explicitly spatial and dynamic emphasis. Several alternatives for expanding the model in future research are described. Moreover, this study adds to the existing literature in the realm of simple microeconomic computational models, specifying structural relationships between local governments and firms, consumers and dwellings mediated by distance.

01a ModEco V2.05 – Model Economies – In C++

Garvin Boyle | Published Monday, February 04, 2013 | Last modified Friday, April 14, 2017

Perpetual Motion Machine - A simple economy that operates at both a biophysical and economic level, and is sustainable. The goal: to determine the necessary and sufficient conditions of sustainability, and the attendant necessary trade-offs.

05 CmLab V1.17 – Conservation of Money Laboratory

Garvin Boyle | Published Saturday, April 15, 2017

In CmLab we explore the implications of the phenomenon of Conservation of Money in a modern economy. This is one of a series of models exploring the dynamics of sustainable economics – PSoup, ModEco, EiLab, OamLab, MppLab, TpLab, CmLab.

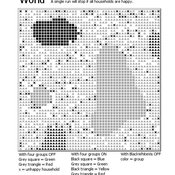

Introducing two extensions of Schelling's segregation model

Andreas Flache, Carlos A. de Matos Fernandes | Published Monday, January 25, 2021

Schelling famously proposed an extremely simple but highly illustrative social mechanism to understand how strong ethnic segregation could arise in a world where individuals do not necessarily want it. Schelling’s simple computational model is the starting point for our extensions in which we build upon Wilensky’s original NetLogo implementation of this model. Our two NetLogo models can be best studied while reading our chapter “Agent-based Computational Models” (Flache and de Matos Fernandes, 2021). In the chapter, we propose 10 best practices to elucidate how agent-based models are a unique method for providing and analyzing formally precise, and empirically plausible mechanistic explanations of puzzling social phenomena, such as segregation, in the social world. Our chapter addresses in particular analytical sociologists who are new to ABMs.

In the first model (SegregationExtended), we build on Wilensky’s implementation of Schelling’s model, which is available in the NetLogo library (Wilensky, 1997). We considerably extend this model, in particular allowing for larger neighborhoods and a population with four groups roughly resembling the ethnic composition of a contemporary large U.S. city. Further additions are the option to include random noise and a number of new outcome measures tuned to highlight macro-level implications of the segregation dynamics for different groups in the agent society.

In SegregationDiscreteChoice, we further modify the model by incorporating three new features in particular: 1) heterogeneous preferences, roughly based on empirical research, that categorize agents as low, medium, or highly tolerant within each of the ethnic subgroups of the population; 2) a continuous, individual-level, single-peaked preference function for agents’ ideal neighborhood composition, which replaces the global threshold (%-similar-wanted); and 3) a discrete choice model according to which agents probabilistically decide whether to move to a vacant spot or stay in the current spot by comparing the attractiveness of both locations based on the individual preference functions.
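The move-or-stay decision described above can be illustrated with a minimal Python sketch. It is not the authors' NetLogo code: the tent-shaped utility, the binary logit form, and the names `single_peaked_utility`, `move_probability`, `ideal_share`, and `beta` are all assumptions made for illustration.

```python
import math
import random


def single_peaked_utility(share_similar, ideal_share):
    """Tent-shaped preference (assumed form): utility is 1.0 when the share of
    similar neighbors equals the agent's ideal, and falls linearly to 0.0 at
    the share farthest from that ideal."""
    worst_distance = max(ideal_share, 1.0 - ideal_share)
    return 1.0 - abs(share_similar - ideal_share) / worst_distance


def move_probability(utility_current, utility_vacant, beta):
    """Binary logit choice (assumed form): the probability of moving rises
    with the utility advantage of the vacant spot; beta (hypothetical
    parameter) controls how deterministic the choice is."""
    return 1.0 / (1.0 + math.exp(-beta * (utility_vacant - utility_current)))


def decides_to_move(utility_current, utility_vacant, beta, rng):
    """Draw one probabilistic move/stay decision."""
    return rng.random() < move_probability(utility_current, utility_vacant, beta)


if __name__ == "__main__":
    rng = random.Random(42)
    # A hypothetical agent whose ideal neighborhood is 60% similar.
    u_here = single_peaked_utility(share_similar=0.20, ideal_share=0.60)
    u_there = single_peaked_utility(share_similar=0.55, ideal_share=0.60)
    print(move_probability(u_here, u_there, beta=5.0))
    print(decides_to_move(u_here, u_there, beta=5.0, rng=rng))
```

With equal utilities the agent moves with probability 0.5; larger values of `beta` make the choice approach a deterministic comparison, which is one way to see how a discrete choice rule generalizes a fixed threshold.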

…

Peer reviewed Monogamous Reproduction in Small Populations and the Enforcement of the Incest Taboo

Ian Stuart | Published Wednesday, January 18, 2023

This program was developed to simulate monogamous reproduction in small populations (and the enforcement of the incest taboo).

Every tick is a year. Adults can look for a mate and enter a relationship. Adult females in a relationship (under the age of 52) have a chance to become pregnant. Everyone dies at 77 (at which point people are instead displayed as flowers).

The user can select the size of the starting population. The starting population consists of adults between the ages of 18 and 42.
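The yearly rules above can be sketched in Python as follows. This is a simplified illustration, not the published program: mate search and the incest-taboo check are omitted, and the adulthood age of 18, the `PREGNANCY_CHANCE` value, and all function and field names are assumptions.

```python
import random
from dataclasses import dataclass

ADULT_AGE = 18           # assumption: adulthood starts at the minimum starting age
MAX_PREGNANCY_AGE = 52   # from the description: females under 52 can become pregnant
DEATH_AGE = 77           # from the description: everyone dies at 77
PREGNANCY_CHANCE = 0.2   # hypothetical value; the description gives no number


@dataclass
class Person:
    age: int
    sex: str                      # "F" or "M"
    in_relationship: bool = False
    alive: bool = True


def make_starting_population(size, rng):
    """Create adults aged 18 to 42, as in the description."""
    return [Person(age=rng.randint(18, 42), sex=rng.choice("FM")) for _ in range(size)]


def step(population, rng, pregnancy_chance=PREGNANCY_CHANCE):
    """Advance one tick (one year) and return the list of newborns."""
    newborns = []
    for person in population:
        if not person.alive:
            continue
        person.age += 1
        if person.age >= DEATH_AGE:
            person.alive = False
            continue
        can_conceive = (
            person.sex == "F"
            and person.in_relationship
            and ADULT_AGE <= person.age < MAX_PREGNANCY_AGE
        )
        if can_conceive and rng.random() < pregnancy_chance:
            newborns.append(Person(age=0, sex=rng.choice("FM")))
    population.extend(newborns)
    return newborns


if __name__ == "__main__":
    rng = random.Random(7)
    people = make_starting_population(20, rng)
    for person in people:
        person.in_relationship = True   # stand-in for the omitted mate search
    for year in range(10):
        step(people, rng)
    print(sum(p.alive for p in people), "alive after 10 years")
```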

…

Peer reviewed Evolution of Ecological Communities: Testing Constraint Closure

Steve Peck | Published Sunday, December 06, 2020 | Last modified Friday, April 16, 2021

Ecosystems are among the most complex structures studied. They comprise elements that seem both stable and contingent. The stability of these systems depends on interactions among their evolutionary history, including the accidents of organisms moving through the landscape and microhabitats of the earth, and the biotic and abiotic conditions in which they occur. When ecosystems are stable, how is that achieved? Here we look at ecosystem stability through a computer simulation model that suggests that it may depend on what constrains the system and how those constraints are structured. Specifically, if the constraints found in an ecological community form a closed loop, this allows particular kinds of feedback that may give structure to the ecosystem processes for a period of time. In this simulation model, we look at how evolutionary forces act in such a way that these closed constraint loops may form. This may explain some kinds of ecosystem stability. This work will also be valuable to ecological theorists in understanding general ideas of stability in such systems.

Peer reviewed The Indus Village's Weather model: procedural generation of daily weather

Andreas Angourakis | Published Tuesday, May 13, 2025

Overview

The Weather model is a procedural generation model designed to create realistic daily weather data for socioecological simulations. It generates synthetic weather time series for solar radiation, temperature, and precipitation using algorithms based on sinusoidal and double logistic functions. The model incorporates stochastic variation to mimic unpredictable weather patterns and aims to provide realistic yet flexible weather inputs for exploring diverse climate scenarios.

The Weather model can be used independently or integrated into larger models, providing realistic weather patterns without extensive coding or data collection. It can be customized to meet specific requirements, enabling users to gain a better understanding of the underlying mechanisms and have greater confidence in their applications.
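As a rough illustration of the sinusoidal component with stochastic variation, the following Python sketch generates one year of daily temperatures. It is not the Weather model's own code: the cosine parameterization, the Gaussian noise, and the names `annual_sinusoid`, `daily_temperature_series`, `peak_day`, and `noise_sd` are assumptions, and the double logistic functions mentioned above are not shown.

```python
import math
import random


def annual_sinusoid(day_of_year, minimum, maximum, peak_day, year_length=365):
    """Deterministic annual cycle (assumed form): a cosine that reaches
    `maximum` on `peak_day` and `minimum` half a year later."""
    mean = (maximum + minimum) / 2.0
    amplitude = (maximum - minimum) / 2.0
    phase = 2.0 * math.pi * (day_of_year - peak_day) / year_length
    return mean + amplitude * math.cos(phase)


def daily_temperature_series(minimum, maximum, peak_day, noise_sd, rng, year_length=365):
    """One synthetic year: the annual cycle plus Gaussian day-to-day noise
    (assumed noise model) with standard deviation `noise_sd`."""
    return [
        annual_sinusoid(day, minimum, maximum, peak_day, year_length)
        + rng.gauss(0.0, noise_sd)
        for day in range(1, year_length + 1)
    ]


if __name__ == "__main__":
    rng = random.Random(2025)
    # Hypothetical parameters: 5 to 35 degrees Celsius, warmest on day 200.
    series = daily_temperature_series(5.0, 35.0, peak_day=200, noise_sd=2.0, rng=rng)
    print(len(series), round(min(series), 1), round(max(series), 1))
```

Passing a seeded `random.Random` instance keeps each generated year reproducible, which matters when the series feeds a larger simulation.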

…
