About the CoMSES Model Library

Our mission is to help computational modelers at all levels engage in the establishment and adoption of community standards and good practices for developing and sharing computational models. Model authors can freely publish their model source code in the Computational Model Library alongside narrative documentation, open science metadata, and other emerging open science norms that facilitate software citation, reproducibility, interoperability, and reuse. Model authors can also request peer review of their computational models to receive a DOI.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications on agent-based and individual-based models, with additional detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 10 results for "peer review"

Exploring Pesticide use and Inter-row management in European Vineyards and their potential Impacts (EPIEVI)

Yang Chen, Nina Schwarz | Published Tuesday, January 24, 2023

The purpose of this study is to explore the potential impacts of pesticide use and inter-row management by European winegrowers in response to policy designs and climate change. Pesticides considered in this study include insecticides, pheromone dispensers (as an alternative to insecticides), and fungicides (both the synthetic type and copper-sulphur based). Inter-row management concerns the arrangement of vegetation in the inter-rows and the type of vegetation.

Open Peer Review Model

Federico Bianchi | Published Monday, May 24, 2021

This is an agent-based model of a population of scientists alternately authoring or reviewing manuscripts submitted to a scholarly journal for peer review. Peer-review evaluation can be either ‘confidential’, i.e. the identity of authors and reviewers is not disclosed, or ‘open’, i.e. authors’ identity is disclosed to reviewers. The quality of the submitted manuscripts varies according to their authors’ resources, which in turn vary according to the number of publications. Reviewers can assess the assigned manuscript’s quality either reliably or unreliably according to varying behavioural assumptions, i.e. direct/indirect reciprocation of past outcome as authors, or deference towards higher-status authors.

The PRIF Model

Davide Secchi | Published Friday, November 08, 2019

This model considers Peer Reviewing under the influence of Impact Factor (PRIF) and aims to explore whether the infamous metric affects the assessment of papers under review. The idea is to consider two types of reviewers: those who are agnostic towards IF (IU1) and those who believe that it is a measure of journal (and article) quality (IU2). This perception is reflected in the evaluation, because the perceived scientific value of a paper becomes a function of the journal to which an article has been submitted. Various mechanisms to update reviewer preferences are also implemented.

Peer review model with heterogeneous grade language

Thomas Feliciani, Ramanathan Moorthy, Pablo Lucas, Kalpana Shankar | Published Thursday, May 07, 2020

This ABM re-implements and extends the simulation model of peer review described in Squazzoni & Gandelli (2013, doi:10.18564/jasss.2128) (hereafter: ‘SG’). The SG model was originally developed for NetLogo and is also available in CoMSES.

The purpose of the original SG model was to explore how different author and reviewer strategies would impact the outcome of a journal peer review system on an array of dimensions including peer review efficacy, efficiency and equality. In SG, reviewer evaluation consists of a continuous variable in the range [0,1], and this evaluation scale is the same for all reviewers. Our present extension to the SG model allows exploring the consequences of two more realistic assumptions on reviewer evaluation: (1) that the evaluation scale is discrete (e.g. like in a Likert scale); (2) that reviewers may differ in how they interpret the grades of the evaluation scale (i.e. that the grade language is heterogeneous).
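The two assumptions can be illustrated with a minimal Python sketch. It is not taken from the published model: the function names, the evenly spaced baseline cut points, and the uniform noise used to make reviewers differ are all assumptions chosen for illustration.

```python
import bisect
import random


def make_thresholds(n_grades, heterogeneity, rng):
    """Return n_grades - 1 sorted cut points in [0, 1] for one reviewer.

    Assumption: the shared baseline is evenly spaced, and each reviewer
    perturbs every cut point by uniform noise of width `heterogeneity`.
    """
    base = [k / n_grades for k in range(1, n_grades)]
    noisy = [
        min(max(t + rng.uniform(-heterogeneity, heterogeneity), 0.0), 1.0)
        for t in base
    ]
    return sorted(noisy)


def grade(evaluation, thresholds):
    """Map a continuous evaluation in [0, 1] to a discrete grade 1..n_grades."""
    return bisect.bisect_right(thresholds, evaluation) + 1


rng = random.Random(42)

# Homogeneous grade language: every reviewer shares the same cut points.
shared = make_thresholds(5, 0.0, rng)

# Heterogeneous grade language: each reviewer has their own cut points,
# so the same underlying evaluation may be reported as different grades.
reviewers = [make_thresholds(5, 0.1, rng) for _ in range(3)]
grades_for_same_paper = [grade(0.62, th) for th in reviewers]
```

With `heterogeneity` set to zero the sketch reduces to a single shared discrete scale; increasing it lets identical continuous evaluations map to different reported grades across reviewers.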

Peer Review Game

Federico Bianchi, Francisco Grimaldo, Giangiacomo Bravo, Flaminio Squazzoni | Published Monday, April 30, 2018

NetLogo software for the Peer Review Game model. It represents a population of scientists endowed with a proportion of a fixed pool of resources. At each step scientists decide how to allocate their resources between submitting manuscripts and reviewing others’ submissions. The quality of submissions and reviews depends on the amount of allocated resources and on a biased perception of submissions’ quality. Scientists can behave according to different allocation strategies, either by simply reacting to the outcome of their previous submission process or by comparing their outcome with published papers’ quality. The overall bias of selected submissions and the quality of published papers are computed at each step.

PR-M: The Peer Review Model

Francisco Grimaldo, Mario Paolucci | Published Sunday, November 10, 2013 | Last modified Wednesday, July 01, 2015

This is an agent-based model of peer review built on the following three entities: papers, scientists and conferences. The model has been implemented on a BDI platform (Jason) that allows both parameter and mechanism exploration.

Perceived Scientific Value and Impact Factor

Davide Secchi, Stephen J Cowley | Published Wednesday, April 12, 2017 | Last modified Monday, January 29, 2018

The model explores the impact of journal metrics (e.g., the notorious impact factor) on the perception that academics have of an article’s scientific value.

Peer Review Model

Flaminio Squazzoni, Claudio Gandelli | Published Wednesday, September 05, 2012 | Last modified Saturday, April 27, 2013

This model looks at the implications of author/referee interaction for the quality and efficiency of peer review. It allows investigating the importance of various reciprocity motives in ensuring cooperation. Peer review is modelled as a process based on knowledge asymmetries and subject to evaluation bias. The model includes various simulation scenarios to test different interaction conditions and author and referee behaviour and various indexes that measure quality and efficiency of evaluation […]

Peer Review with Multiple Reviewers

Federico Bianchi, Flaminio Squazzoni | Published Thursday, September 10, 2015

This ABM looks at the effect of multiple reviewers and their behavior on the quality and efficiency of peer review. It models a community of scientists who alternately act as “author” or “reviewer” at each turn.

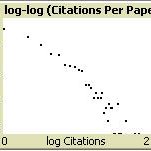

Citation Agents: A model of collective learning through scientific publication

Christopher Watts | Published Friday, July 31, 2015

Simulates the construction of scientific journal publications, including authors, references, contents and peer review. Also simulates collective learning on a fitness landscape. Described in: Watts, Christopher & Nigel Gilbert (forthcoming) “Does cumulative advantage affect collective learning in science? An agent-based simulation”, Scientometrics.